Deploying Zero

To deploy a Zero app, you need to:

- Deploy your backend database. Most standard Postgres hosts work with Zero.

- Deploy zero-cache. We provide a Docker image that can work with most Docker hosts.

- Deploy your frontend. You can use any hosting service like Vercel or Netlify.

This page describes how to deploy zero-cache.

Architecture

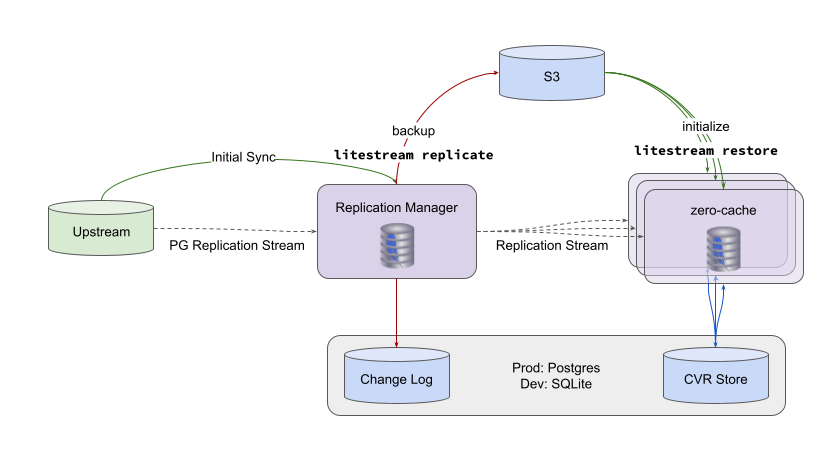

zero-cache is a horizontally scalable, stateful web service that maintains a SQLite replica of your Postgres database. It uses this replica to sync ZQL queries to clients over WebSockets.

You don't have to know the details of how zero-cache works to run it, but it helps to know the basic structure.

A running zero-cache is composed of a single replication-manager node and multiple view-syncer nodes. It also depends on Postgres, S3, and attached SSD storage.

Upstream: Your application's Postgres database.

Change DB: A Postgres DB used by Zero to store a recent subset of the Postgres replication log.

CVR DB: A Postgres DB used by Zero to store Client View Records (CVRs). CVRs track the state of each synced client.

S3: Stores a canonical copy of the SQLite replica.

File System: Used by both node types to store local copies of the SQLite replica. Can be ephemeral – Zero will re-initialize from S3 on startup. Attached SSD storage is recommended for best performance.

Replication Manager: Serves as the single consumer of the Postgres replication log. Stores a recent subset of the Postgres changelog in the Change DB for catching up View Syncers when they initialize. Also maintains the canonical replica, which View Syncers initialize from.

View Syncers: Handle WebSocket connections from clients and run ZQL queries. They update the CVR DB with the latest state of each client as queries run, and use the CVR DB on client connection to compute the initial diff to catch clients up.

Topology

You should deploy zero-cache close to your database because the mutation implementation is chatty.

In the future, mutations will move out of zero-cache.

When that happens you can deploy zero-cache geographically distributed and it will double as a read-replica.

Updating

When run with multiple View Syncer nodes, zero-cache supports rolling, downtime-free updates. A new Replication Manager takes over the replication stream from the old Replication Manager, and connections from the old View Syncers are gradually drained and absorbed by active View Syncers.

Configuration

The zero-cache image is configured via environment variables. See zero-cache Config for available options.

Guide: Multi-Node on SST+AWS

SST is our recommended way to deploy Zero.

Setup Upstream

Create an upstream Postgres database server somewhere. See Connecting to Postgres for details. Ensure the upstream, change, and cvr databases are created.

Setup AWS

See AWS setup guide. The end result should be that you have a dev profile and SSO session defined in your ~/.aws/config file.

Initialize SST

npx sst init --yes

Choose "aws" for where to deploy.

Then overwrite /sst.config.ts with the following code:

/* eslint-disable */
/// <reference path="./.sst/platform/config.d.ts" />
import { readFileSync } from "fs";

export default $config({
  app(input) {
    return {
      name: "hello-zero",
      removal: input?.stage === "production" ? "retain" : "remove",
      home: "aws",
      region: process.env.AWS_REGION || "us-east-1",
    };
  },
  async run() {
    const loadSchemaJson = () => {
      if (process.env.ZERO_SCHEMA_JSON) {
        return process.env.ZERO_SCHEMA_JSON;
      }
      try {
        const schema = readFileSync("zero-schema.json", "utf8");
        // Parse and stringify to ensure single line
        return JSON.stringify(JSON.parse(schema));
      } catch (error) {
        const e = error as Error;
        console.error(`Failed to read schema file: ${e.message}`);
        throw new Error(
          "Schema must be provided via ZERO_SCHEMA_JSON env var or zero-schema.json file"
        );
      }
    };

    const schemaJson = loadSchemaJson();

    // S3 Bucket
    const replicationBucket = new sst.aws.Bucket(`replication-bucket`);

    // VPC Configuration
    const vpc = new sst.aws.Vpc(`vpc`, {
      az: 2,
    });

    // ECS Cluster
    const cluster = new sst.aws.Cluster(`cluster`, {
      vpc,
    });

    const conn = new sst.Secret("PostgresConnectionString");
    const zeroAuthSecret = new sst.Secret("ZeroAuthSecret");

    // Common environment variables
    const commonEnv = {
      ZERO_UPSTREAM_DB: conn.value,
      ZERO_CVR_DB: conn.value,
      ZERO_CHANGE_DB: conn.value,
      ZERO_SCHEMA_JSON: schemaJson,
      ZERO_AUTH_SECRET: zeroAuthSecret.value,
      ZERO_REPLICA_FILE: "sync-replica.db",
      ZERO_LITESTREAM_BACKUP_URL: $interpolate`s3://${replicationBucket.name}/backup`,
      ZERO_IMAGE_URL: "rocicorp/zero:canary",
      ZERO_CVR_MAX_CONNS: "10",
      ZERO_UPSTREAM_MAX_CONNS: "10",
    };

    // Replication Manager Service
    const replicationManager = cluster.addService(`replication-manager`, {
      cpu: "2 vCPU",
      memory: "8 GB",
      image: commonEnv.ZERO_IMAGE_URL,
      link: [replicationBucket],
      health: {
        command: ["CMD-SHELL", "curl -f http://localhost:4849/ || exit 1"],
        interval: "5 seconds",
        retries: 3,
        startPeriod: "300 seconds",
      },
      environment: {
        ...commonEnv,
        ZERO_CHANGE_MAX_CONNS: "3",
        ZERO_NUM_SYNC_WORKERS: "0",
      },
      loadBalancer: {
        public: false,
        ports: [
          {
            listen: "80/http",
            forward: "4849/http",
          },
        ],
      },
      transform: {
        loadBalancer: {
          idleTimeout: 3600,
        },
        target: {
          healthCheck: {
            enabled: true,
            path: "/keepalive",
            protocol: "HTTP",
            interval: 5,
            healthyThreshold: 2,
            timeout: 3,
          },
        },
      },
    });

    // View Syncer Service
    cluster.addService(`view-syncer`, {
      cpu: "2 vCPU",
      memory: "8 GB",
      image: commonEnv.ZERO_IMAGE_URL,
      link: [replicationBucket],
      health: {
        command: ["CMD-SHELL", "curl -f http://localhost:4848/ || exit 1"],
        interval: "5 seconds",
        retries: 3,
        startPeriod: "300 seconds",
      },
      environment: {
        ...commonEnv,
        ZERO_CHANGE_STREAMER_URI: replicationManager.url,
      },
      logging: {
        retention: "1 month",
      },
      loadBalancer: {
        public: true,
        rules: [{ listen: "80/http", forward: "4848/http" }],
      },
      transform: {
        target: {
          healthCheck: {
            enabled: true,
            path: "/keepalive",
            protocol: "HTTP",
            interval: 5,
            healthyThreshold: 2,
            timeout: 3,
          },
          stickiness: {
            enabled: true,
            type: "lb_cookie",
            cookieDuration: 120,
          },
          loadBalancingAlgorithmType: "least_outstanding_requests",
        },
      },
    });
  },
});

Set SST Secrets

Configure SST with your Postgres connection string and Zero Auth Secret.

Note that if you use JWT-based auth, you'll need to change the environment variables in the sst.config.ts file above, then set a different secret here.

npx sst secret set PostgresConnectionString "YOUR-PG-CONN-STRING"

npx sst secret set ZeroAuthSecret "YOUR-ZERO-AUTH-SECRET"

Deploy

npx sst deploy

This takes about 5-10 minutes.

If successful, you should see a URL for the view-syncer service. This is the URL to pass to the server parameter of the Zero constructor on the client.
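Before wiring the URL into the client, it can help to confirm that the view-syncer load balancer answers. The following is a minimal sketch, not part of Zero itself. It assumes the `/keepalive` path that the load balancer health check uses in the config above also returns a 2xx status to an ordinary GET request. The helper names `keepaliveUrl` and `probeKeepalive` are hypothetical.

```typescript
// Minimal shape of the fetch function this sketch needs. The global `fetch`
// in Node 18+ and in browsers satisfies it.
type FetchLike = (url: string) => Promise<{ ok: boolean }>;

// Build the health check URL for a deployed view-syncer.
// Assumption: "/keepalive" is the path used by the load balancer health check.
function keepaliveUrl(server: string): string {
  return new URL("/keepalive", server).toString();
}

// Resolves to true when the endpoint answers with a 2xx status, false otherwise.
async function probeKeepalive(
  server: string,
  fetchImpl: FetchLike = fetch,
): Promise<boolean> {
  try {
    const res = await fetchImpl(keepaliveUrl(server));
    return res.ok;
  } catch {
    // Network errors (DNS, refused connection, TLS) count as "not reachable".
    return false;
  }
}
```

Pass the URL printed by `npx sst deploy` as `server`. The `fetchImpl` parameter exists only so the probe can be exercised with a stub in tests.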

Guide: Single-Node on Fly.io

Let's deploy the Quickstart app to Fly.io. We'll use Fly.io for both the database and zero-cache.

Setup Quickstart

Go through the Quickstart guide to get the app running locally.

Setup Fly.io

Create an account on Fly.io and install the Fly CLI.

Create Postgres app

INITIALS=aa

PG_APP_NAME=$INITIALS-zstart-pg

PG_PASSWORD="$(head -c 256 /dev/urandom | od -An -t x1 | tr -d ' \n' | tr -dc 'a-zA-Z' | head -c 16)"

fly postgres create \
  --name $PG_APP_NAME \
  --region lax \
  --initial-cluster-size 1 \
  --vm-size shared-cpu-2x \
  --volume-size 40 \
  --password=$PG_PASSWORD

Seed Upstream database

Populate the database with initial data and set its wal_level to logical to support replication to zero-cache. Then restart the database to apply the changes.

(cat ./docker/seed.sql; echo "\q") | fly pg connect -a $PG_APP_NAME

echo "ALTER SYSTEM SET wal_level = logical; \q" | fly pg connect -a $PG_APP_NAME

fly postgres restart --app $PG_APP_NAME

Create zero-cache Fly.io app

CACHE_APP_NAME=$INITIALS-zstart-cache

fly app create $CACHE_APP_NAME

Publish zero-cache

Create a fly.toml file. We'll copy the zero-schema.json into the toml file to pass it to the server as an environment variable.

CONNECTION_STRING="postgres://postgres:$PG_PASSWORD@$PG_APP_NAME.flycast:5432"
ZERO_VERSION=$(npm list @rocicorp/zero | grep @rocicorp/zero | cut -f 3 -d @)

cat <<EOF > fly.toml
app = "$CACHE_APP_NAME"
primary_region = 'lax'

[build]
image = "registry.hub.docker.com/rocicorp/zero:${ZERO_VERSION}"

[http_service]
internal_port = 4848
force_https = true
auto_stop_machines = 'off'
min_machines_running = 1

[[http_service.checks]]
grace_period = "10s"
interval = "30s"
method = "GET"
timeout = "5s"
path = "/"

[[vm]]
memory = '2gb'
cpu_kind = 'shared'
cpus = 2

[mounts]
source = "sqlite_db"
destination = "/data"

[env]
ZERO_REPLICA_FILE = "/data/sync-replica.db"
ZERO_UPSTREAM_DB="${CONNECTION_STRING}/zstart?sslmode=disable"
ZERO_CVR_DB="${CONNECTION_STRING}/zstart_cvr?sslmode=disable"
ZERO_CHANGE_DB="${CONNECTION_STRING}/zstart_cdb?sslmode=disable"
ZERO_AUTH_SECRET="secretkey"
LOG_LEVEL = "debug"
ZERO_SCHEMA_JSON = """$(cat zero-schema.json)"""
EOF
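The three database URLs in the `[env]` section share one host and differ only in the database name. If you generate this configuration from a script rather than a shell heredoc, a sketch like the following keeps them consistent and percent-encodes the password. It is an illustration only: the function name `flyDbUrls` is hypothetical, and the `postgres` user, `.flycast:5432` host suffix, database names, and `sslmode=disable` are copied from the fly.toml above.

```typescript
interface ZeroDbUrls {
  ZERO_UPSTREAM_DB: string;
  ZERO_CVR_DB: string;
  ZERO_CHANGE_DB: string;
}

// Build the three connection strings zero-cache needs on Fly.io.
function flyDbUrls(pgAppName: string, pgPassword: string): ZeroDbUrls {
  // Encode the password so characters such as "@" or "/" cannot break the URL.
  const password = encodeURIComponent(pgPassword);
  const build = (database: string): string =>
    `postgres://postgres:${password}@${pgAppName}.flycast:5432/${database}?sslmode=disable`;

  return {
    ZERO_UPSTREAM_DB: build("zstart"),
    ZERO_CVR_DB: build("zstart_cvr"),
    ZERO_CHANGE_DB: build("zstart_cdb"),
  };
}
```

The password generated earlier contains only letters, so encoding is a no-op there. It matters only if you substitute your own password.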

Then publish zero-cache:

fly deploy

Use Remote zero-cache

cat <<EOF > .env
VITE_PUBLIC_SERVER='https://${CACHE_APP_NAME}.fly.dev/'
EOF

Now restart the frontend to pick up the env change, and refresh the app. You can stop your local database and zero-cache as we're not using them anymore. Open the web inspector to verify the app is talking to the remote zero-cache!
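A misconfigured `VITE_PUBLIC_SERVER` tends to surface only as a failed WebSocket connection, so a small guard at startup can make the problem obvious. The sketch below is one way to do it under stated assumptions: `resolveZeroServer` is a hypothetical helper, and the environment is passed in as a plain object (in a Vite app that would typically be `import.meta.env`). The returned string is what you would pass as the `server` parameter of the Zero constructor.

```typescript
// Hypothetical helper: validate the zero-cache URL read from the environment
// before handing it to the Zero client as its `server` parameter.
function resolveZeroServer(env: Record<string, string | undefined>): string {
  const raw = env.VITE_PUBLIC_SERVER;
  if (!raw) {
    throw new Error("VITE_PUBLIC_SERVER is not set");
  }
  // A literal "${" usually means a shell variable was never expanded,
  // for example because the value was wrapped in single quotes.
  if (raw.includes("${")) {
    throw new Error(`VITE_PUBLIC_SERVER contains an unexpanded variable: ${raw}`);
  }
  let url: URL;
  try {
    url = new URL(raw);
  } catch {
    throw new Error(`VITE_PUBLIC_SERVER is not a valid URL: ${raw}`);
  }
  if (url.protocol !== "http:" && url.protocol !== "https:") {
    throw new Error(`VITE_PUBLIC_SERVER must use http or https, got ${url.protocol}`);
  }
  // Return the value unchanged; this helper only validates.
  return raw;
}
```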

You can deploy the frontend to any standard hosting service like Vercel or Netlify, or even to Fly.io!

Deploy Frontend to Vercel

If you've followed the above guide and deployed zero-cache to fly, you can simply run:

vercel deploy --prod \
  -e ZERO_AUTH_SECRET="secretkey" \
  -e VITE_PUBLIC_SERVER="https://${CACHE_APP_NAME}.fly.dev/"

to deploy your frontend to Vercel.

Explaining the arguments above:

- ZERO_AUTH_SECRET: The secret to create and verify JWTs. This is the same secret that was used when deploying zero-cache to fly.

- VITE_PUBLIC_SERVER: The URL the frontend will call to talk to the zero-cache server. This is the URL of the fly app.

Guide: Multi-Node on Raw AWS

S3 Bucket

Create an S3 bucket. zero-cache uses S3 to backup its SQLite replica so that it survives task restarts.

Fargate Services

Run zero-cache as two Fargate services (using the same rocicorp/zero docker image):

replication-manager

- zero-cache config:
  - ZERO_LITESTREAM_BACKUP_URL=s3://{bucketName}/{generation}
  - ZERO_NUM_SYNC_WORKERS=0

- Task count: 1

view-syncer

- zero-cache config:
  - ZERO_LITESTREAM_BACKUP_URL=s3://{bucketName}/{generation}
  - ZERO_CHANGE_STREAMER_URI=http://{replication-manager}

- Task count: N

- Loadbalancing to port 4848 with
  - algorithm: least_outstanding_requests
  - health check path: /keepalive
  - health check interval: 5 seconds
  - stickiness: lb_cookie
  - stickiness duration: 3 minutes
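The two services differ only in a couple of environment variables. The sketch below shows one way to derive the per-service settings listed above from shared inputs. It is illustrative only: `roleEnv` and its input type are hypothetical names, and the other variables a real deployment needs (such as the database connection strings) are omitted.

```typescript
type ZeroRole = "replication-manager" | "view-syncer";

interface RoleEnvInput {
  bucketName: string;
  // Arbitrary path component, e.g. a date or a counter.
  generation: string;
  // Host name the view-syncers use to reach the replication-manager.
  replicationManagerHost: string;
}

// Return only the variables that are specific to the multi-node layout.
function roleEnv(role: ZeroRole, input: RoleEnvInput): Record<string, string> {
  const shared = {
    ZERO_LITESTREAM_BACKUP_URL: `s3://${input.bucketName}/${input.generation}`,
  };
  if (role === "replication-manager") {
    // The replication-manager runs no sync workers.
    return { ...shared, ZERO_NUM_SYNC_WORKERS: "0" };
  }
  // View-syncers stream changes from the replication-manager.
  return {
    ...shared,
    ZERO_CHANGE_STREAMER_URI: `http://${input.replicationManagerHost}`,
  };
}
```

Both services receive the same backup URL, so changing `generation` in one place applies to both.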

Notes

- Standard rolling restarts are fine for both services

- The view-syncer task count is static; update the service to change the count.
  - Support for dynamic resizing (i.e. Auto Scaling) is planned.

- Set ZERO_CVR_MAX_CONNS and ZERO_UPSTREAM_MAX_CONNS appropriately so that the total connections from both running and updating view-syncers (e.g. DesiredCount * MaximumPercent) do not exceed your database’s max_connections.

- The {generation} component of the s3://{bucketName}/{generation} URL is an arbitrary path component that can be modified to reset the replica (e.g. a date, a number, etc.). Setting this to a new path is the multi-node equivalent of deleting the replica file to resync.
  - Note: zero-cache does not manage cleanup of old generations.

- The replication-manager serves requests on port 4849. Routing from the view-syncer to the http://{replication-manager} can be achieved using the following mechanisms (in order of preference):
  - An internal load balancer
  - Service Connect
  - Service Discovery

- Fargate ephemeral storage is used for the replica.
  - The default size is 20GB. This can be increased up to 200GB.
  - Allocate at least twice the size of the database to support the internal VACUUM operation.
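The connection and storage notes above reduce to simple arithmetic, sketched below. All function names are hypothetical. The connection check assumes the CVR and upstream databases live on the same Postgres server, as in the SST guide where they share one connection string; if they are on separate servers, check each limit on its own. It also ignores connections from the replication-manager and from your application, which you can account for with `reservedConns`.

```typescript
interface ViewSyncerPlan {
  desiredCount: number;     // ECS DesiredCount for the view-syncer service
  maximumPercent: number;   // ECS deployment MaximumPercent, e.g. 200
  cvrMaxConns: number;      // ZERO_CVR_MAX_CONNS
  upstreamMaxConns: number; // ZERO_UPSTREAM_MAX_CONNS
}

// Peak number of view-syncer tasks during a rolling update
// (running plus updating), rounded down.
function peakTasks(plan: ViewSyncerPlan): number {
  return Math.floor((plan.desiredCount * plan.maximumPercent) / 100);
}

// Worst-case connections opened by view-syncers against one Postgres server.
function peakConnections(plan: ViewSyncerPlan): number {
  return peakTasks(plan) * (plan.cvrMaxConns + plan.upstreamMaxConns);
}

// True when the plan stays within the database's max_connections.
function fitsMaxConnections(
  plan: ViewSyncerPlan,
  maxConnections: number,
  reservedConns = 0,
): boolean {
  return peakConnections(plan) + reservedConns <= maxConnections;
}

// Upper bound on Fargate ephemeral storage stated in the notes above.
const FARGATE_MAX_EPHEMERAL_GB = 200;

// Ephemeral storage to allocate: at least twice the database size,
// leaving room for the internal VACUUM operation.
function requiredEphemeralGb(databaseGb: number): number {
  const needed = Math.ceil(databaseGb * 2);
  if (needed > FARGATE_MAX_EPHEMERAL_GB) {
    throw new Error(`Need ${needed}GB, above the ${FARGATE_MAX_EPHEMERAL_GB}GB limit`);
  }
  return needed;
}
```

For example, 4 tasks with a MaximumPercent of 200 and the values from the SST guide (10 connections each for CVR and upstream) can open up to 8 * 20 = 160 connections during an update.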

Guide: $PLATFORM

Where should we deploy Zero next? Let us know on Discord!